Monday, 31 May 2010

Experience Design II Show at Penrhyn Road

Scarface AR by Nick Irons

Painting with Wii by Jaspreet Deusi, Nico Stromnes and Matt Steggles

Jelly Bellyicious by Siobhan Sawyer, Schinel Outerbridge and Angela Boodoo

Set the Bird Free by Jeff Townsend and Jamie Buckley

Thursday, 27 May 2010

Technical specification - making it all come together

As we said earlier, we discovered that we could not get movies (at least not the way we made them) to work in Processing, because QuickTime was the only video format supported. This was a problem because, although one half of the team was using a Mac, where this would all work, the other half was using a PC. After investigation we found that QuickTime was not supported on Linux, but was supported on Windows. However, the Bluetooth driver that would work with the Wii Remotes would only work with Microsoft's Bluetooth interface and not the Toshiba interface that the PC had. In the end we re-shot the whole thing as stills, then cut out the images to make them transparent PNGs. This gave us greater flexibility when importing the series of images into Processing.

We then concentrated on saving all our image elements into Processing to build up the scene.

The Processing sketch would have four different states, reflecting the four possible threads through the story.

- KITTEN_CHASING_BIRD

- BIRD_IN_CAGE

- KITTEN_CAUGHT_BIRD

- BIRD_SAVED

The setup() method was used to initialize all the variables required during the sketch and also to load all the images and sounds that would be used.

During development it became clear that a number of the "actors" in our story had similar properties and abilities. For example: the bird and the cage were each assigned to a Wii Remote and could be moved independently. In order to reflect this, a class "Actor" was defined that encapsulated the properties common to all of them.

An "Actor" had:

- a position on-screen

- an optional Wii Remote assigned to it

An "Actor" was able to:

- detect whether it was near another Actor

- chase another Actor

- adjust its position on input from the Wii Remote assigned to it
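The two lists above could be sketched roughly as follows in plain Java (Processing is Java-based). This is an illustration only, not the code from the sketch: the field names, the `radius` and `speed` parameters, and the way remote input arrives as a simple movement delta are all assumptions.

```java
// Hypothetical sketch of the Actor idea; all names here are assumptions.
class Actor {
    float x, y;        // position on-screen
    Integer remoteId;  // optional Wii Remote index; null means none assigned

    Actor(float x, float y, Integer remoteId) {
        this.x = x;
        this.y = y;
        this.remoteId = remoteId;
    }

    // True when the other actor lies within `radius` pixels of this one.
    boolean isNear(Actor other, float radius) {
        float dx = other.x - x;
        float dy = other.y - y;
        return dx * dx + dy * dy <= radius * radius;
    }

    // Move up to `speed` pixels towards the target without overshooting it.
    void chase(Actor target, float speed) {
        float dx = target.x - x;
        float dy = target.y - y;
        float dist = (float) Math.sqrt(dx * dx + dy * dy);
        if (dist <= speed) {
            x = target.x;
            y = target.y;
            return;
        }
        x += dx / dist * speed;
        y += dy / dist * speed;
    }

    // Apply a movement delta from the assigned remote; ignored if there is none.
    void moveFromRemote(float dx, float dy) {
        if (remoteId == null) {
            return;
        }
        x += dx;
        y += dy;
    }
}
```

In this sketch the kitten would be created with no remote (`new Actor(0, 0, null)`) and call `chase(bird, speed)` every frame, while the bird and cage would each be created with a remote index and moved with `moveFromRemote`.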

We then identified two variants of these actors: Static and Animated. Static Actors were represented by a single image, whereas Animated Actors were represented by a continuously displayed set of images. The Animated Actor was our way of making Processing animate without using the QuickTime library which, as mentioned above, was not supported on all platforms.
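The frame-cycling idea behind an Animated Actor can be illustrated with a small helper like the one below. It is a sketch under assumptions: the class name `FrameCycler`, the use of file names rather than loaded images, and the one-frame-per-call stepping are all hypothetical, not taken from the sketch itself.

```java
// Hypothetical helper: cycles through a fixed list of frames, one per call.
class FrameCycler {
    private final String[] frames; // e.g. names of the transparent PNG stills
    private int index = 0;

    FrameCycler(String... frames) {
        if (frames.length == 0) {
            throw new IllegalArgumentException("need at least one frame");
        }
        this.frames = frames.clone();
    }

    // Return the frame to show now, then advance, wrapping back to the start.
    String nextFrame() {
        String current = frames[index];
        index = (index + 1) % frames.length;
        return current;
    }
}
```

Seen this way, a Static Actor is just the special case with a single frame, since `nextFrame()` then returns the same image every time.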

Once we had put these classes in place, the Processing draw() function was simply a matter of detecting whether different Actors were near each other and then altering the state of the sketch to match. For example: if the kitten is near the bird, then the game ends; if the bird is near the cage, then go to the next stage; if the bird in the cage is near the window, go on to the final scene.
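The rules described here amount to a small state machine over the four states listed earlier. The sketch below is one possible reading in plain Java, not the original code: the mapping of each rule to a state, the `StoryRules.next` helper, the boolean parameters, and the choice to let the kitten win when it and the cage reach the bird in the same frame are all assumptions.

```java
// The four states named in the post.
enum State { KITTEN_CHASING_BIRD, BIRD_IN_CAGE, KITTEN_CAUGHT_BIRD, BIRD_SAVED }

// Hypothetical helper holding the transition rules.
class StoryRules {
    // Work out the next state from the current one and the proximity checks.
    static State next(State current,
                      boolean kittenNearBird,
                      boolean birdNearCage,
                      boolean cageNearWindow) {
        switch (current) {
            case KITTEN_CHASING_BIRD:
                // Assumption: the kitten takes priority if both are true.
                if (kittenNearBird) {
                    return State.KITTEN_CAUGHT_BIRD;
                }
                if (birdNearCage) {
                    return State.BIRD_IN_CAGE;
                }
                return current;
            case BIRD_IN_CAGE:
                // The caged bird is released once it reaches the window.
                return cageNearWindow ? State.BIRD_SAVED : current;
            default:
                // KITTEN_CAUGHT_BIRD and BIRD_SAVED are treated as end states.
                return current;
        }
    }
}
```

Keeping the rules in one function like this means draw() only has to run the proximity checks, ask for the next state, and then draw whatever that state requires.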

The final scene, where the bird flies into the sunset, calls on another class, Bird, which stores a position and whether the wings are up or down. There are also various functions for drawing a Bird object. This code came from an earlier prototype where we had multiple birds flying around the screen. However, the story for our sketch only called for one bird, so that is what we used.
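As a rough illustration of a class like this, the sketch below stores a position and a wing state and flips the wings on each step. It is a guess at the shape of such a class, not the prototype code: the `flap` and `pose` methods are hypothetical, and `pose` merely stands in for the real drawing functions.

```java
// Hypothetical reconstruction; the post only says that Bird stores a
// position and whether the wings are up or down.
class Bird {
    float x, y;      // position on-screen
    boolean wingsUp; // true when the wings are in the "up" pose

    Bird(float x, float y) {
        this.x = x;
        this.y = y;
        this.wingsUp = true;
    }

    // Advance one animation step: move by (dx, dy) and flip the wing pose.
    void flap(float dx, float dy) {
        x += dx;
        y += dy;
        wingsUp = !wingsUp;
    }

    // Stand-in for the drawing functions: report which pose would be drawn.
    String pose() {
        return wingsUp ? "wings-up" : "wings-down";
    }
}
```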

Narrative - what's the point?

As far as advancing the narrative was concerned, we thought it would be good to involve another "player", and that is when Jeff struck upon the idea of using Wii Remotes. It was now a question of developing the story to give the players some kind of purpose or reason to be engaging with the bird cage.

First, we separated the two images – one was of the bird, the other was of the cage – and gave the players control of each image via the Wii Remotes. Then Jeff struck upon the genius idea of introducing the villain of the piece – the Killer Kitten! The aim was to get the bird into the cage while avoiding being caught by the kitten, thus introducing an element of gameplay and requiring some skill. The instructions will be published on card next to the finished piece, as below:

If you managed to get the bird into the cage before the kitten "pounced" on it, then the bird would be safe inside the cage, out of harm's way. (If not, the screen turns red!)

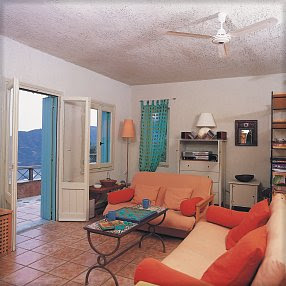

We would then move the scene on to a room where you, as the remote controller, could release the bird. You did this by bringing the caged bird to the open window in our scene.

The player(s) are then rewarded by seeing the bird fly off into the sunset!

So we have set up a potential conflict, heightened the risk, then built in a resolution.